Human Eye-Gaze Tracking Using Webcam Technology

In 2020, I led a project on human eye-gaze tracking using webcam technology. The method uses appearance-based techniques to predict gaze direction from ordinary camera images, with digital accessibility as a motivating application.

I started the work early that year, when I joined a startup in Paris working on computer vision. Our focus was user interaction through eye-gaze tracking, using nothing more than a standard laptop camera.

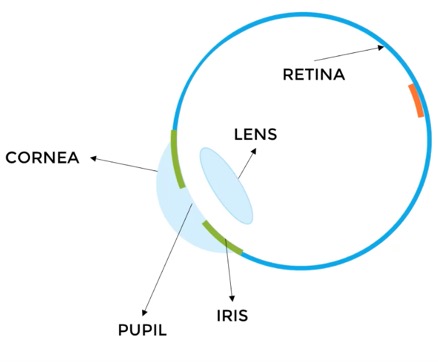

Understanding the Human Eye

Just like a camera, the human eye features several key components:

- Lens: Focuses light rays onto the retina.

- Iris: Controls the pupil’s size.

- Pupil: Allows light to enter the eye.

- Cornea: Transparent front layer of the eye.

- Retina: Captures the light and converts it into nerve signals for the brain.

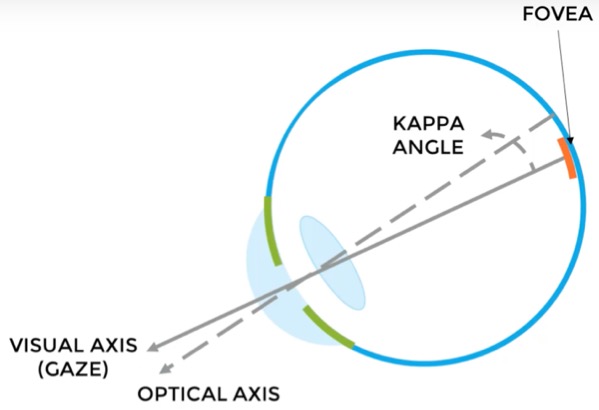

The Challenge of Eye-Gaze Detection

Eye-gaze tracking faces a particular challenge: the eye's optical axis does not coincide with the visual axis, the line of sight that determines where a person is actually looking. The angle between the two, known as the kappa angle, varies between individuals, so accurate tracking requires per-user calibration.
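As a rough illustration of what per-user calibration can look like, here is a minimal sketch that assumes the individual offset can be approximated by a constant shift in pitch and yaw. The user looks at a few known targets, and the mean difference between the target angles and the raw predictions becomes the correction. The function names `fit_offset` and `apply_offset` are hypothetical, and this is not necessarily the calibration scheme used in the project.

```python
def fit_offset(predicted, targets):
    """Estimate a constant (pitch, yaw) correction for one user.

    predicted: raw model outputs as (pitch, yaw) pairs, in radians.
    targets:   the known (pitch, yaw) of each calibration target, in radians.
    """
    if not predicted or len(predicted) != len(targets):
        raise ValueError("need the same non-zero number of predictions and targets")
    n = len(predicted)
    d_pitch = sum(t[0] - p[0] for p, t in zip(predicted, targets)) / n
    d_yaw = sum(t[1] - p[1] for p, t in zip(predicted, targets)) / n
    return d_pitch, d_yaw


def apply_offset(angles, offset):
    """Shift a raw (pitch, yaw) prediction by the user's calibrated offset."""
    return angles[0] + offset[0], angles[1] + offset[1]
```

A constant offset is the simplest possible model. A real system might fit a richer mapping if the error changes with gaze direction.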

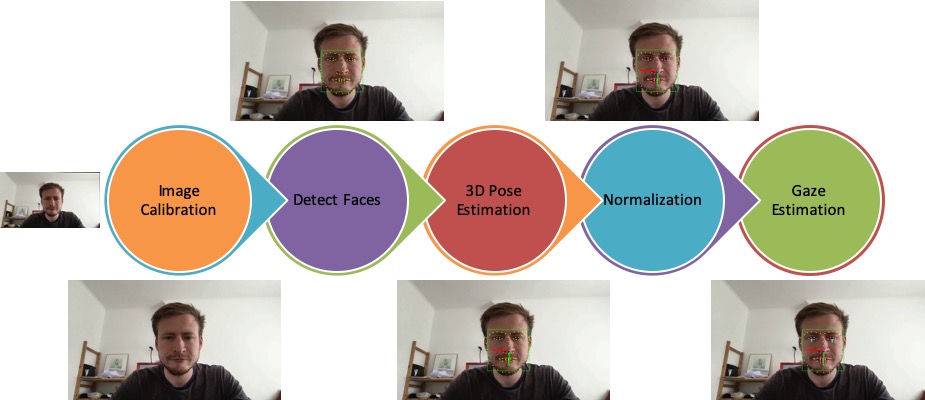

My Approach: Appearance-Based Gaze Tracking

I used appearance-based methods, building on the work of Zhang et al., which predicts gaze angles directly from images of the eyes or the full face. Our pipeline involved:

- Calibrating the camera and undistorting the image.

- Detecting faces with dlib’s face detector.

- Estimating head pose and fitting a 3D face landmark model.

- Extracting, normalizing, and combining eye images into a two-channel image for the model.

- Predicting the gaze direction as pitch and yaw angles.
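The last step outputs two angles, but downstream geometry usually needs a 3D direction. The sketch below converts between the two representations. It assumes one common convention in appearance-based gaze work, where a person looking straight into the camera has a gaze vector of (0, 0, -1). The sign convention and the function names are assumptions, not details taken from the project.

```python
import math


def angles_to_vector(pitch, yaw):
    """Convert gaze angles (radians) into a unit 3D direction vector.

    Assumed convention: pitch = yaw = 0 points straight at the camera,
    which is the -z direction in camera coordinates.
    """
    x = -math.cos(pitch) * math.sin(yaw)
    y = -math.sin(pitch)
    z = -math.cos(pitch) * math.cos(yaw)
    return (x, y, z)


def vector_to_angles(vector):
    """Inverse of angles_to_vector; the input need not be normalized."""
    x, y, z = vector
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("zero-length gaze vector")
    x, y, z = x / norm, y / norm, z / norm
    pitch = math.asin(-y)
    yaw = math.atan2(-x, -z)
    return pitch, yaw
```

Working with unit vectors makes it straightforward to average several predictions or to measure the angle between a prediction and the ground truth.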

Enhancing Performance

To improve stability and accuracy, I averaged both the facial landmarks and the predicted gaze vectors instead of relying on single raw estimates. This reduced errors and made the system's output more reliable.
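One way to implement this kind of averaging is a moving average over the most recent frames. The sketch below assumes temporal averaging with a fixed window. The class name `GazeSmoother` and the window size are illustrative choices, not details of the original system. The same idea applies to landmark coordinates.

```python
import math
from collections import deque


class GazeSmoother:
    """Moving average over the last `window` gaze vectors."""

    def __init__(self, window=5):
        # deque with maxlen drops the oldest vector automatically
        self.history = deque(maxlen=window)

    def update(self, vector):
        """Add a new 3D gaze vector and return the smoothed unit vector."""
        self.history.append(tuple(vector))
        n = len(self.history)
        mean = [sum(v[i] for v in self.history) / n for i in range(3)]
        norm = math.sqrt(sum(c * c for c in mean))
        if norm == 0:
            # Vectors cancelled out exactly; nothing meaningful to normalize
            return tuple(mean)
        return tuple(c / norm for c in mean)
```

A larger window gives a steadier output but reacts more slowly when the gaze really moves, so the window size is a trade-off between stability and latency.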

Results and Insights

We evaluated the system by measuring the mean absolute error (MAE) of its predictions across various distances. Comparing the error at each distance shows where the model performs well and where it needs improvement, and points to room for further advances in gaze tracking.
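For reference, a per-distance MAE can be computed along the following lines. This sketch assumes each evaluation sample records a distance plus the predicted and ground-truth (pitch, yaw) angles. The sample layout and the function name `mae_by_distance` are hypothetical, and no project data is reproduced here.

```python
from collections import defaultdict


def mae_by_distance(samples):
    """Mean absolute angle error, grouped by distance.

    samples: iterable of (distance, (pred_pitch, pred_yaw), (true_pitch, true_yaw)).
    Returns a dict mapping each distance to the mean of |pred - true|
    taken over both angles, in the same unit as the inputs.
    """
    abs_errors = defaultdict(list)
    for distance, pred, true in samples:
        abs_errors[distance].append(abs(pred[0] - true[0]))
        abs_errors[distance].append(abs(pred[1] - true[1]))
    return {d: sum(errs) / len(errs) for d, errs in sorted(abs_errors.items())}
```

Reporting pitch and yaw separately, or using the angle between 3D gaze vectors, are equally reasonable alternatives to pooling the two angles.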

Explore More on My GitHub

For a deeper dive into this project and more of my work in computer vision, visit my GitHub page.