Innovations in Video and Image Content Understanding for Social Media Platforms

In 2019, we built a content moderation and understanding pipeline for social platforms. It used YOLOv3 and Darknet for real-time object detection and tackled video-action detection, speech transcription, and automatic text summarization, with the goal of improving user experience and ad targeting.

During a journey in late summer 2019, a chance encounter led to a project aimed at improving content understanding on social media platforms similar to Instagram and TikTok. Our mission was clear: to filter out sensitive content such as nudity and violence, and to refine ad targeting using machine learning.

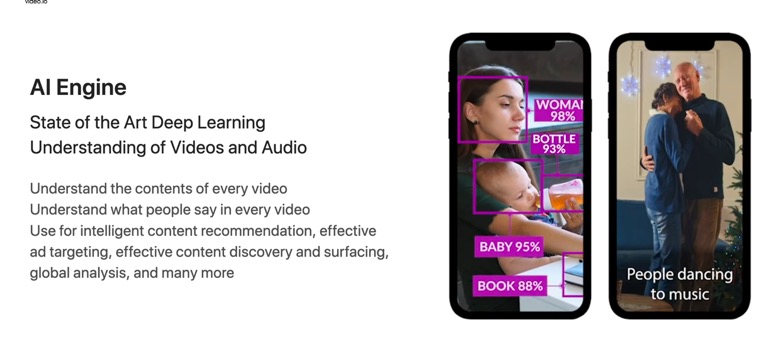

Advanced Object Detection

Using pjreddie’s Darknet framework and the YOLOv3 model, we built a high-speed image and video classification pipeline. The system identified the predominant objects in each piece of media and output the results as structured JSON, and it outperformed the traditional methods we compared against in both speed and accuracy.
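To make the "predominant objects as JSON" step concrete, here is a minimal sketch of how raw detections might be aggregated. The `(label, confidence)` tuple layout, the `summarize_detections` name, and the output field names are all assumptions for illustration; they are not Darknet's native output format or the project's actual schema.

```python
import json
from collections import defaultdict


def summarize_detections(detections, min_confidence=0.5, top_k=3):
    """Aggregate detections into the predominant objects.

    `detections` is an iterable of (label, confidence) pairs. This layout
    is a hypothetical stand-in for whatever the detector emits per frame.
    """
    counts = defaultdict(int)
    best = defaultdict(float)
    for label, confidence in detections:
        if confidence < min_confidence:
            continue  # drop low-confidence boxes
        counts[label] += 1
        best[label] = max(best[label], confidence)

    # Rank by how often a label appears, then by its best confidence,
    # then alphabetically so the ordering is deterministic.
    ranked = sorted(counts, key=lambda lb: (-counts[lb], -best[lb], lb))
    return {
        "objects": [
            {
                "label": label,
                "count": counts[label],
                "max_confidence": round(best[label], 3),
            }
            for label in ranked[:top_k]
        ]
    }


sample = [("person", 0.98), ("dog", 0.91), ("person", 0.87), ("bicycle", 0.32)]
print(json.dumps(summarize_detections(sample), indent=2))
```

Ranking by frequency first favors objects that persist across many frames of a video over a single confident but fleeting detection; a different weighting may suit still images better.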

Pioneering Video-Action Detection

Action detection in videos posed its own set of challenges, from vast parameter spaces to scarce training data. Our solution integrated NVIDIA’s STEP (Spatio-Temporal Progressive Learning), a model designed to capture the complex temporal dynamics of video content.
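Clip-based action detectors generally consume short windows of consecutive frames rather than a whole video at once. The sketch below shows one common way to cut a video into overlapping windows; the `clip_windows` helper, the clip length, and the stride are illustrative assumptions and are not taken from STEP's own preprocessing.

```python
def clip_windows(num_frames, clip_len=16, stride=8):
    """Return (start, end) frame index pairs, with `end` exclusive.

    Windows overlap when stride < clip_len. A final window is added,
    aligned to the end of the video, so trailing frames are not dropped.
    """
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    if num_frames <= 0:
        return []
    if num_frames <= clip_len:
        return [(0, num_frames)]  # video shorter than one clip

    starts = list(range(0, num_frames - clip_len + 1, stride))
    if starts[-1] + clip_len < num_frames:
        starts.append(num_frames - clip_len)
    return [(start, start + clip_len) for start in starts]


for window in clip_windows(45):
    print(window)
```

Each window would then be passed to the action model independently, which also makes the work easy to spread across processes.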

Revolutionizing Speech Transcription

We used Google’s Speech-to-Text API to transcribe the audio extracted from videos. The transcripts enriched content understanding and opened the way to more contextual ad placements and user interactions.
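Before transcription, the audio track has to be pulled out of each video. The original text does not say which tool did this, so the following is only a sketch that assumes `ffmpeg` is installed; the helper name, file names, and the 16 kHz mono setting are illustrative choices. The output is 16-bit PCM WAV, which corresponds to the LINEAR16 encoding that speech APIs commonly accept.

```python
import shlex


def build_audio_extract_command(video_path, audio_path, sample_rate=16000):
    """Build an ffmpeg command that writes a mono 16-bit PCM WAV file.

    Assumes ffmpeg is available on PATH; this helper only builds the
    argument list and does not run anything.
    """
    return [
        "ffmpeg",
        "-y",                    # overwrite the output file if it exists
        "-i", video_path,        # input video
        "-vn",                   # drop the video stream
        "-ac", "1",              # downmix to a single channel
        "-ar", str(sample_rate), # resample to the target rate
        "-acodec", "pcm_s16le",  # 16-bit little-endian PCM
        audio_path,
    ]


cmd = build_audio_extract_command("clip.mp4", "clip.wav")
print(shlex.join(cmd))
```

The list can be handed to `subprocess.run(cmd, check=True)`; passing a list rather than a shell string avoids quoting problems with unusual file names.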

Innovating with Automatic Text Summarization

We explored both extractive and abstractive summarization to condense the transcribed text into digestible summaries. The extractive approach selected the most informative sentences from the transcript, while the abstractive approach generated new, concise sentences, closer to how a human would summarize the content.
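As an illustration of the extractive side, here is a minimal frequency-based sketch: sentences are scored by the average frequency of their non-stopword terms, and the top ones are returned in their original order. This is a textbook baseline chosen for brevity, not necessarily the method used in the project, and the tiny stopword list is a placeholder.

```python
import re
from collections import Counter

# A deliberately small stopword list, for illustration only.
STOPWORDS = {"a", "an", "and", "in", "is", "it", "of", "on", "the", "to", "was"}


def _content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text, max_sentences=2):
    """Return the highest-scoring sentences, kept in document order."""
    sentences = [
        s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()
    ]
    freq = Counter(_content_words(text))

    def score(sentence):
        tokens = _content_words(sentence)
        # Average rather than sum, so long sentences are not favored.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: (-score(sentences[i]), i))
    chosen = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in chosen)


transcript = (
    "The dog chased the ball. The dog caught the ball in the park. Clouds drifted."
)
print(extractive_summary(transcript, max_sentences=1))
```

An abstractive summarizer would instead generate new sentences with a sequence-to-sequence model, which is beyond what a short standard-library example can show.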

Overcoming Challenges

Video-action detection was the project’s most formidable challenge: it meant working through the latest research while also meeting the pipeline’s parallel-processing requirements. By using multiprocessing and careful design, we synchronized the analysis of audio and visual elements, which improved both the efficiency and the accuracy of content understanding.
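The idea of running the audio and visual branches side by side and then joining their results can be sketched as follows. The two `analyze_*` functions are hypothetical placeholders that return empty results; in a real pipeline they would wrap the detection, transcription, and summarization stages. The `executor_cls` parameter is also an assumption, added so the same code can run with threads where process pools are inconvenient.

```python
from concurrent.futures import ProcessPoolExecutor


def analyze_visual(video_path):
    """Placeholder for object and action detection on the video frames."""
    return {"objects": [], "actions": []}


def analyze_audio(video_path):
    """Placeholder for audio extraction, transcription, and summarization."""
    return {"transcript": "", "summary": ""}


def analyze_media(video_path, executor_cls=ProcessPoolExecutor):
    """Run both branches concurrently and merge them into one record."""
    with executor_cls(max_workers=2) as pool:
        visual_future = pool.submit(analyze_visual, video_path)
        audio_future = pool.submit(analyze_audio, video_path)
        # result() blocks until each branch finishes, which is the
        # synchronization point between the two analyses.
        return {
            "source": video_path,
            "visual": visual_future.result(),
            "audio": audio_future.result(),
        }
```

When using the default process pool in a script, call `analyze_media("clip.mp4")` from under an `if __name__ == "__main__":` guard so that worker processes can import the module safely. Separate processes suit the CPU-heavy visual branch, while a thread pool is often enough for a branch that mostly waits on a remote API.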

For an in-depth look at our journey and technological breakthroughs, visit our project page.