Advanced Computer Vision for Accurate Position Estimation

How we improved inside-out positioning with computer vision at Fraunhofer IIS in 2018 by rethinking image pre-processing and simplifying the CNN architecture.

In late 2018 at Fraunhofer IIS in Nürnberg, I worked on inside-out positioning for autonomous systems using RGB cameras. With inside-out positioning, a device estimates its own position from what its onboard camera sees instead of being tracked by external infrastructure, which makes it a key technology for navigation and spatial awareness in robotics and autonomous vehicles. Learn more about inside-out positioning.

Enhancing Warehouse Navigation with Computer Vision

Fraunhofer’s warehouse dataset pairs 360° imagery with absolute positions. It served as the foundation for our experiments on improving autonomous navigation through image processing and CNN architectures.

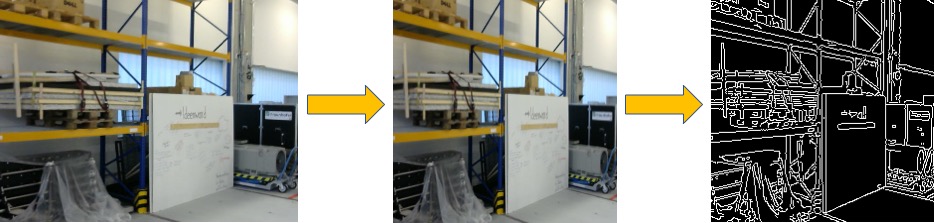

Optimizing Image Processing for Precision

With standard pre-processing, we initially struggled to retain critical image detail, because center-cropping discards everything outside the central window. Scaling the full image to 224x224 and applying edge detection instead preserved valuable contextual information and improved how efficiently the model learned.
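To make the difference concrete, here is a minimal sketch of the two pre-processing options using only NumPy. The function names, the 640x480 frame size, and the nearest-neighbour sampling are assumptions for illustration; the project may have used a different resizing method or library.

```python
import numpy as np

def center_crop(img: np.ndarray, size: int = 224) -> np.ndarray:
    """Cut a size x size window from the middle; everything outside it is lost.

    Assumes the image is at least `size` pixels in both dimensions.
    """
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def resize_nearest(img: np.ndarray, size: int = 224) -> np.ndarray:
    """Scale the whole frame to size x size with nearest-neighbour sampling."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return img[rows[:, None], cols]

# Hypothetical frame with a bright landmark at the left border.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
frame[:, :100] = 255

cropped = center_crop(frame)    # landmark falls outside the crop window
scaled = resize_nearest(frame)  # landmark survives, at reduced resolution
```

Scaling a non-square frame to a square input distorts the aspect ratio, but every region of the scene stays visible to the network, which is the trade-off described above.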

Introducing ‘Edges’: A Game-Changer in Image Analysis

Instead of the traditional mean-image subtraction, I used edge detection as the pre-processing step. This improved the CNN model’s accuracy by focusing it on the structural details that matter for precise positioning.
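The sketch below contrasts the two input normalizations: subtracting a mean image versus computing an edge map. The post does not say which edge detector was used, so the Sobel operator here is an assumption, and both function names are hypothetical.

```python
import numpy as np

def subtract_mean_image(img: np.ndarray, mean_img: np.ndarray) -> np.ndarray:
    """Traditional normalization: remove the dataset's average image."""
    return img.astype(np.float32) - mean_img.astype(np.float32)

def sobel_edges(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude from 3x3 Sobel kernels, scaled to [0, 1].

    Sobel is an assumed stand-in for the edge detector used in the project.
    """
    g = np.pad(gray.astype(np.float32), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weighted 1-2-1.
    gx = (g[:-2, 2:] + 2 * g[1:-1, 2:] + g[2:, 2:]) \
       - (g[:-2, :-2] + 2 * g[1:-1, :-2] + g[2:, :-2])
    # Vertical gradient: bottom row minus top row, weighted 1-2-1.
    gy = (g[2:, :-2] + 2 * g[2:, 1:-1] + g[2:, 2:]) \
       - (g[:-2, :-2] + 2 * g[:-2, 1:-1] + g[:-2, 2:])
    mag = np.hypot(gx, gy)
    peak = mag.max()
    return mag / peak if peak > 0 else mag

# Hypothetical 8x8 grayscale patch with a vertical brightness step.
patch = np.zeros((8, 8), dtype=np.uint8)
patch[:, 4:] = 255
edges = sobel_edges(patch)  # non-zero only along the step
```

An edge map discards absolute brightness and keeps only where intensity changes, which is one plausible reason it emphasizes structure such as shelf outlines over lighting conditions.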

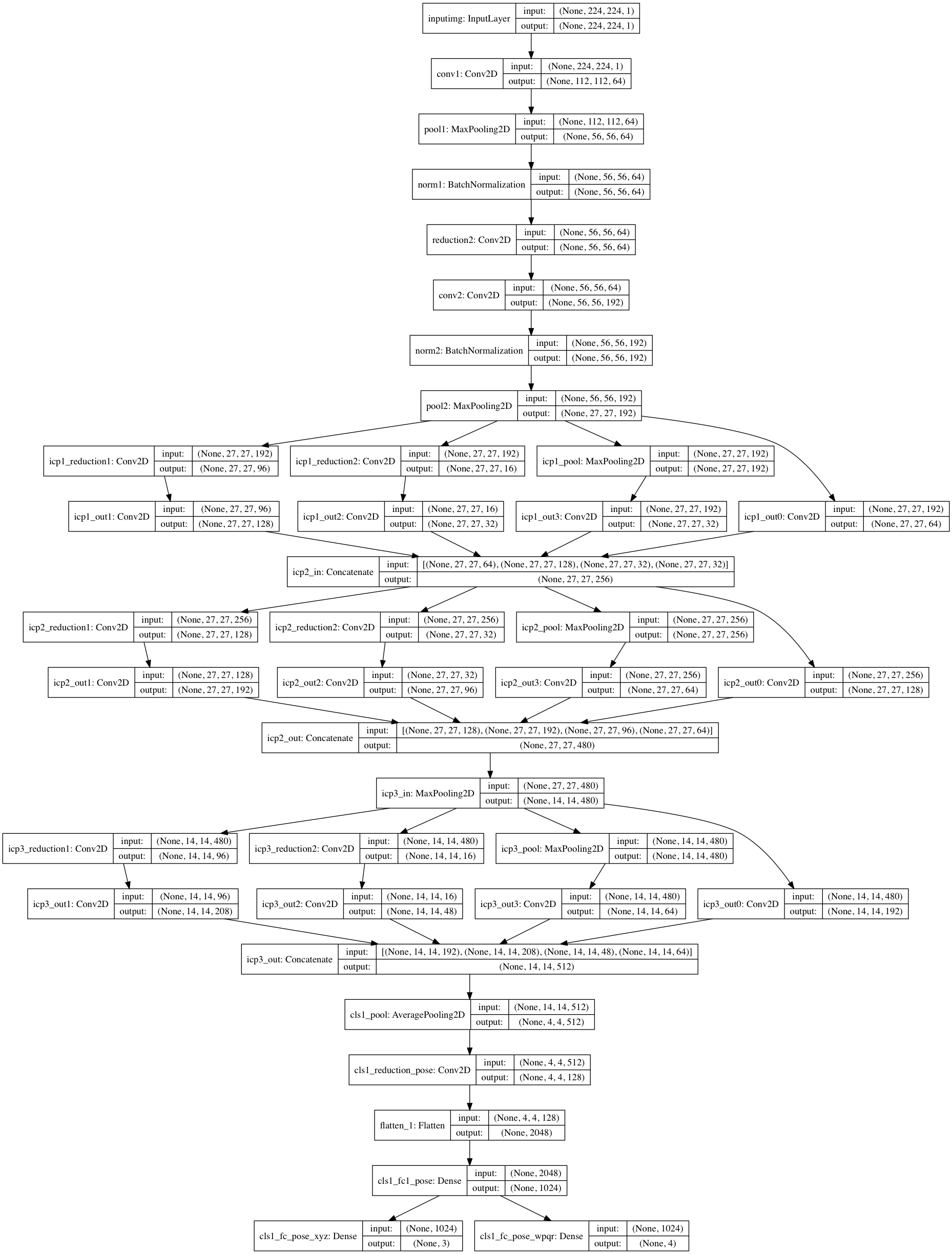

Streamlining CNN Architecture for Better Performance

Our simplified CNN model, inspired by GoogLeNet’s architecture but less complex, proved more effective for our specific use case: it required fewer parameters and delivered better performance.
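To illustrate what "fewer parameters" means in practice, the following sketch counts the trainable parameters of a small convolutional stack. The layer list is purely hypothetical and is not the architecture from this project; it assumes a single-channel input, global average pooling before the final layer, and a two-value (x, y) output.

```python
def conv2d_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights plus one bias per output channel for a square-kernel convolution."""
    return (kernel_size * kernel_size * in_channels + 1) * out_channels

def dense_params(in_features: int, out_features: int) -> int:
    """Weights plus one bias per output unit for a fully connected layer."""
    return (in_features + 1) * out_features

def count_params(layers) -> int:
    """Sum parameters over (kind, n_in, n_out[, kernel_size]) layer tuples."""
    total = 0
    for kind, n_in, n_out, *rest in layers:
        if kind == "conv":
            total += conv2d_params(n_in, n_out, rest[0])
        else:
            total += dense_params(n_in, n_out)
    return total

# Hypothetical small network, NOT the real model from the project.
hypothetical_small_net = [
    ("conv", 1, 16, 5),
    ("conv", 16, 32, 3),
    ("conv", 32, 64, 3),
    ("dense", 64, 2),  # after global average pooling: 64 features -> (x, y)
]

total = count_params(hypothetical_small_net)
```

Running the same count over a deeper, wider network shows how quickly the number grows with channel width and kernel size, which is why a trimmed-down design can be easier to train on a limited dataset.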

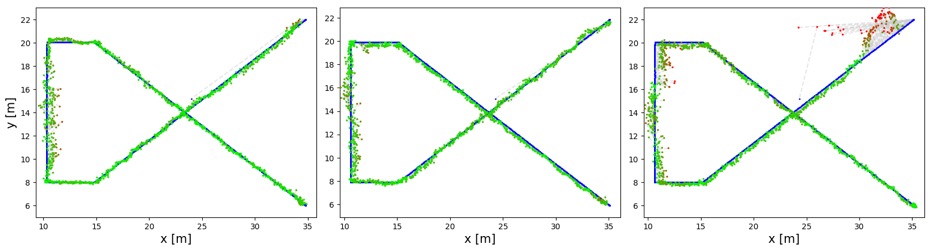

Breakthrough Results in Position Estimation

Our results showed improvements across the key metrics: Mean Absolute Error (MAE), Circular Error Probable (CEP, the radius that contains 50% of the position errors), and the 95% circular error (CE95, the radius that contains 95% of them).
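These metrics can be computed from predicted and ground-truth positions as sketched below. The sketch assumes 2D positions, takes the error as the Euclidean distance per sample, and uses the common percentile definitions of CEP and CE95; the project's exact definitions may differ, and the function name is hypothetical.

```python
import numpy as np

def position_metrics(pred, truth) -> dict:
    """Return MAE, CEP and CE95 of the per-sample Euclidean position error."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    err = np.linalg.norm(pred - truth, axis=1)  # one distance per sample
    return {
        "mae": float(err.mean()),               # average error distance
        "cep": float(np.percentile(err, 50)),   # half of all errors fall within this radius
        "ce95": float(np.percentile(err, 95)),  # 95% of all errors fall within this radius
    }

# Made-up positions in metres, for illustration only.
truth = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
pred = [[3.0, 4.0], [0.0, 0.0], [0.0, 1.0], [6.0, 8.0]]
metrics = position_metrics(pred, truth)
```

Reporting CEP and CE95 alongside MAE is useful because a few large outliers can inflate the mean while leaving the median radius almost unchanged.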

The comparative analysis shows our ‘smallNet’ system performing better across the operational setups we evaluated, a substantial step forward for computer vision-based positioning.

For a deeper dive into the project and access to our codebase, visit our GitHub repository.